Prevent your Docker host from running out of disk space

Introduction

Running out of disk space on your Docker host is a common problem. It can cause containers and their services to hang and become unresponsive, and it can also prevent you from creating new containers or scaling existing ones. Worst case, your root filesystem runs out of space and you have serious issues recovering.

Fortunately, there are several ways to prevent this from happening.

Prevent Docker host disk space exhaustion

First, you need to check the disk space on your Docker host. You can do this via the command line:

df -h

Or, to show only one filesystem type (ext4 in this example), use df -t ext4.

You should see all your filesystems and their space usage.

Filesystem     1K-blocks      Used Available Use% Mounted on
/dev/nvme0n1p1 474755104 207145408 243420000  46% /


To get details about the Docker usage itself, you can run:

docker system df

You should see something like this:

❯ docker system df
TYPE            TOTAL     ACTIVE    SIZE      RECLAIMABLE
Images          7         4         2.187GB   867.3MB (39%)
Containers      6         0         118.7MB   118.7MB (100%)
Local Volumes   1560      1         39.24GB   39.16GB (99%)
Build Cache     83        0         3.666GB   3.666GB

This command summarizes how much disk space your images, containers, local volumes, and build cache use, and how much of that space is reclaimable.

For a detailed, machine-readable listing, you can add the verbose flag and set a different output format:

docker system df -v --format "{{json .}}"

The best prevention is to monitor the disk space on your Docker host and to alert based on it. Opvizor gives you all features you need with the Windows, Linux, or Docker extension.
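As a starting point for this kind of monitoring, a small shell check can compare the usage of a filesystem against a threshold. This is only a minimal sketch: the function names usage_percent and check_disk and the 80% limit are illustrative assumptions, not part of Docker or of any monitoring product. A real setup would send the warning to your alerting system instead of printing it.

```shell
#!/bin/sh
# usage_percent PATH: print the Use% of the filesystem holding PATH
# as a bare integer (df -P gives a stable, one-line-per-filesystem layout).
usage_percent() {
    df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# check_disk PATH LIMIT: return 1 and warn on stderr when usage is at or
# above LIMIT percent, otherwise print an OK line and return 0.
check_disk() {
    used=$(usage_percent "$1")
    if [ "$used" -ge "$2" ]; then
        echo "WARNING: $1 is ${used}% full (limit ${2}%)" >&2
        return 1
    fi
    echo "OK: $1 is ${used}% full"
}

# Example (hypothetical threshold): check_disk / 80
```

When the check runs from cron, the non-zero exit status of check_disk can be used to trigger a notification.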

Clean up container logs

To view the logs of a container, use the docker logs command followed by the container name or ID. The command works on one container at a time; there is no flag to show the logs of all containers at once.

When you run docker logs for a container, the entries shown depend on the log rotation and on how long the container has been running. As this output can be huge, you should always limit the number of lines shown by adding --tail=100 or something similar.

docker logs --tail=100 mycontainer

With the default json-file logging driver, Docker stores the standard output and standard error of every container you run in JSON-formatted log files. If you never clean them up, they can grow to 10 GB, 100 GB, or more.

All those log lines are stored on disk and, as already mentioned, can consume massive amounts of space over time, so cleaning them up makes a lot of sense.
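Before cleaning anything, it can help to see which containers are responsible for the largest log files. The following is a minimal sketch that assumes the json-file driver and the default Docker root directory /var/lib/docker. The function name largest_logs is hypothetical, and reading that directory normally requires root.

```shell
#!/bin/sh
# largest_logs [ROOT] [COUNT]: list the COUNT largest *-json.log files
# below ROOT/containers, biggest first, with sizes in KiB.
largest_logs() {
    root=${1:-/var/lib/docker}
    count=${2:-10}
    find "$root/containers" -name '*-json.log' -type f \
        -exec du -k {} + 2>/dev/null |
        sort -rn | head -n "$count"
}

# Example: sudo sh -c '. ./largest_logs.sh; largest_logs /var/lib/docker 5'
```

The directory name in each path is the container ID, so you can map a large file back to its container with docker ps.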

Docker container log files can be cleaned up in several ways:

- Manually

- Using a crontab cleanup job

- Using logrotate

- Using the built-in Docker log rotation

Manual cleanup of log files

As the log files can be stored in different folders or mount points depending on the system setup, the safest way is to use docker inspect to detect the log file path. The following command empties the log file of the container mycontainer.

sudo truncate -s 0 $(docker inspect --format='{{.LogPath}}' mycontainer)

or you can use

truncate -s 0 /var/lib/docker/containers/**/*-json.log

or as root:

sudo sh -c "truncate -s 0 /var/lib/docker/containers/**/*-json.log"

If your Docker log files are stored in a different location, first use the docker system info command to find the Docker root directory.

docker system info | grep "Docker Root Dir";

If you want a single command that empties all container logs and detects the correct log file location on its own, use:

sudo sh -c 'truncate -s 0 $(docker system info | grep "Docker Root Dir" | cut -d ":" -f2 | cut -d " " -f2-)/containers/*/*-json.log';
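Emptying every log file is a blunt tool. A gentler variant truncates only the files that have grown past a size limit. The sketch below assumes GNU find (for the k and M size suffixes) and the truncate utility from coreutils. The function name truncate_large_logs and the 100M default are illustrative choices, not Docker features.

```shell
#!/bin/sh
# truncate_large_logs ROOT [MIN_SIZE]: empty every *-json.log file below
# ROOT/containers that is larger than MIN_SIZE (find -size syntax, e.g. 100M).
truncate_large_logs() {
    root=$1
    min_size=${2:-100M}
    find "$root/containers" -name '*-json.log' -type f \
        -size "+$min_size" -exec truncate -s 0 {} +
}

# Example (run as root): truncate_large_logs /var/lib/docker 100M
```

Smaller log files are left untouched, so recent output from quiet containers is still available through docker logs.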

crontab cleanup of log files

To configure an automatic cleanup using crontab, do the following.

Switch to root if needed

sudo -s

Create a file for daily cleanup of your Container logs using crontab:

cat <<EOF >> /etc/cron.daily/clear-docker-logs
#!/bin/sh
truncate -s 0 /var/lib/docker/containers/**/*-json.log
EOF

or if you prefer the universal option:

cat <<EOF >> /etc/cron.daily/clear-docker-logs
#!/bin/sh
truncate -s 0 $(docker system info | grep "Docker Root Dir" | cut -d ":" -f2 | cut -d " " -f2-)/containers/*/*-json.log
EOF

Make sure the script is executable:

chmod +x /etc/cron.daily/clear-docker-logs

From now on, the script cleans all logs every day.

By the way, you can also forward the container logs to Opvizor, so you don't lose them and have them fully searchable and actionable (dashboards, alerts).

Configure log rotation

Docker provides two methods to help us with this issue: log rotation and configurable limits for containers.

Log rotation archives or discards older container logs at regular intervals or once they reach a size limit, so that they don't accumulate on your host machine. You can configure log rotation in two ways:

- Using logrotate

- Using the built-in Docker log rotation

Logrotate

Create a new logrotate config file for your Docker containers, /etc/logrotate.d/logrotate-docker, and put the following configuration in it:

/var/lib/docker/containers/*/*.log {
  rotate 7
  daily
  compress
  missingok
  delaycompress
  copytruncate
}

Important: Double-check the log file location using the following command and change the logrotate config if needed:

docker system info | grep "Docker Root Dir";

Now you can test your log rotation. Note that the -f flag forces a rotation right away:

logrotate -fv /etc/logrotate.d/logrotate-docker

On most distributions, installing logrotate also schedules it to run daily (typically through a script in /etc/cron.daily or a systemd timer), so there is no need to configure anything else.

Built-in Docker log rotation

Since Docker 1.8, the logging driver can rotate logs itself, which enables automatic log rotation without any external tool.

To configure log rotation for all containers, set the log-driver and log-opts values in the daemon.json file, which is located in /etc/docker/ on Linux hosts or C:\ProgramData\docker\config\ on Microsoft Windows hosts.

{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}

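Make sure to restart the Docker daemon or the host after making these changes. The new settings apply only to containers created afterwards; existing containers keep their previous logging configuration until they are recreated.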
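With max-size and max-file set, the disk space that logs can occupy has a predictable ceiling: roughly max-size times max-file per container. The sketch below works through that arithmetic. It assumes decimal units (1m = 1,000,000 bytes), so treat the result as an estimate, not an exact limit. The function names to_bytes and log_ceiling are made up for this example.

```shell
#!/bin/sh
# to_bytes SIZE: convert a size such as 10m, 512k or 1g to bytes
# (decimal units are an assumption of this sketch).
to_bytes() {
    num=${1%[kKmMgG]}
    case $1 in
        *[kK]) echo $((num * 1000)) ;;
        *[mM]) echo $((num * 1000 * 1000)) ;;
        *[gG]) echo $((num * 1000 * 1000 * 1000)) ;;
        *)     echo "$num" ;;
    esac
}

# log_ceiling MAX_SIZE MAX_FILE CONTAINERS: estimated worst-case log bytes.
log_ceiling() {
    echo $(( $(to_bytes "$1") * $2 * $3 ))
}

log_ceiling 10m 3 20   # prints 600000000, about 600 MB for 20 containers
```

With the values from the configuration above, each container is capped at about 30 MB of logs, which makes the total easy to budget for.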

Clean up unused containers, images and volumes

Luckily there are simple ways to clean up containers, images and volumes.

Clean up <none> Tag images

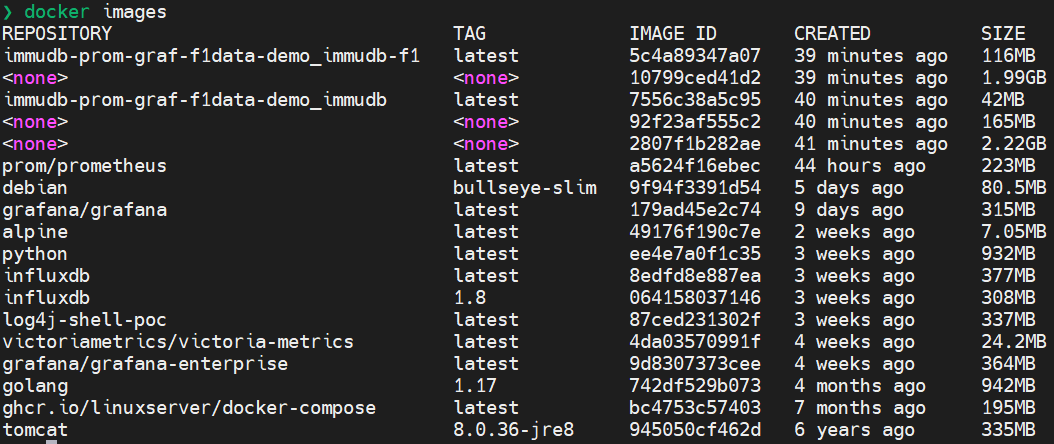

When running the docker images command you get the local cached images and their size.

To list only the images with the <none> tag, you can run:

docker images -f "dangling=true"

REPOSITORY   TAG      IMAGE ID       CREATED          SIZE
<none>       <none>   10799ced41d2   38 minutes ago   1.99GB
<none>       <none>   92f23af555c2   39 minutes ago   165MB
<none>       <none>   2807f1b282ae   39 minutes ago   2.22GB

These images can eat up a lot of disk space but are normally only used temporarily to build other images. In most cases, they are no longer needed after the final image has been built and tagged.

You can easily delete them:

docker rmi $(docker images -f "dangling=true" -q)
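One caveat with this one-liner: when there are no dangling images, the inner command prints nothing and docker rmi fails because it receives no arguments. In a script, a small guard avoids that error. The wrapper below is a sketch; the function name remove_dangling_images is hypothetical.

```shell
#!/bin/sh
# remove_dangling_images: delete all dangling images, or do nothing
# (and succeed) when there are none.
remove_dangling_images() {
    ids=$(docker images -f "dangling=true" -q)
    if [ -z "$ids" ]; then
        echo "no dangling images"
        return 0
    fi
    # Word splitting on $ids is intentional: one argument per image ID.
    docker rmi $ids
}
```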

Recent Docker versions also provide a simpler command to get rid of these images:

docker image prune

or, to skip the confirmation prompt in automated jobs:

docker image prune --force

As a daily cron job, this would look like:

sudo -s

cat <<EOF >> /etc/cron.daily/delete-none-images
#!/bin/sh
docker image prune --force
EOF

chmod +x /etc/cron.daily/delete-none-images

Hardcore cleanup

To also clean up unused (not running) containers, images, volumes, and networks, each resource type has its own prune command. BE CAREFUL WITH THESE COMMANDS: ALL INACTIVE ASSETS WILL BE DELETED!

docker container prune

docker image prune

docker volume prune

docker network prune

To run any of them unattended, add --force to skip the confirmation prompt:

docker image prune --force

To clean up containers, images, networks, and build cache in one go, the hardcore cleanup command is

docker system prune

and to prune volumes as well

docker system prune --volumes

Filter by date

The container, image, and network prune commands accept an until filter. It restricts deletion to resources created before the given point in time, for example --filter "until=24h" for anything older than 24 hours. This is very useful to protect recently created resources. Note that docker volume prune does not support the until filter.

docker container prune --filter "until=24h"

docker image prune --filter "until=24h"

docker network prune --filter "until=24h"
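The date filter combines well with the cron approach used earlier. The sketch below writes a prune script into a cron directory of your choice. The function name install_prune_job, the file name docker-prune, and the 168h default are assumptions made for this example. Volumes are left out on purpose, since docker volume prune does not accept the until filter.

```shell
#!/bin/sh
# install_prune_job DIR [AGE]: write an executable prune script into DIR
# that removes containers, images and networks created more than AGE ago.
install_prune_job() {
    target="$1/docker-prune"
    age=${2:-168h}
    # '>' overwrites the file, so running this twice never duplicates lines.
    # The unquoted EOF lets $age expand while the file is written.
    cat > "$target" <<EOF
#!/bin/sh
docker container prune --force --filter "until=$age"
docker image prune --force --filter "until=$age"
docker network prune --force --filter "until=$age"
EOF
    chmod +x "$target"
}

# Example (run as root): install_prune_job /etc/cron.weekly 168h
```

Containers are pruned first so that images and networks they referenced are no longer in use when the later commands run.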

Conclusion

If your host is running out of disk space, you have a few options. You can configure Docker or your OS to rotate its logs on a daily basis. You can clear up unused containers, images and volumes to free up space.

Lastly, if none of these methods work for you then consider upgrading your storage capacity so that it meets your needs as an application developer or system administrator.

Remember to always monitor your free space before it is too late and you need to rescue your system. Prevention is always cheaper than recovery.